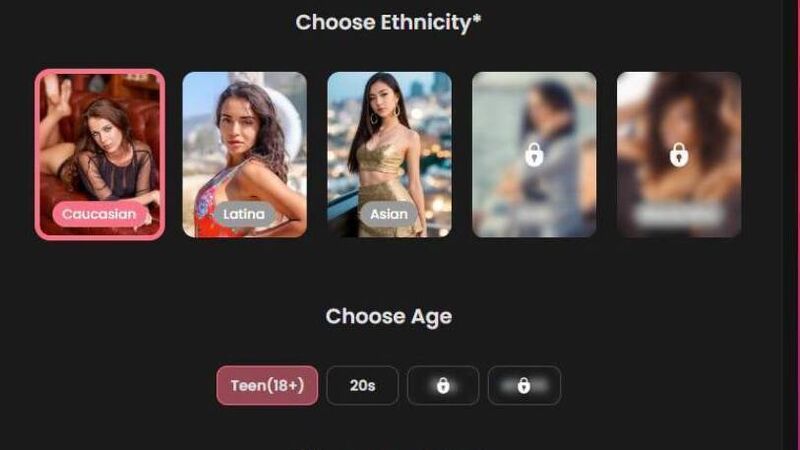

Children being 'bombarded' online by 'AI girlfriend' porn apps

Australian research has shown how some sites allow boys to digitally create scenes of women and underage girls being sexually tortured by men.

Children are being “bombarded” online by so-called AI girlfriend porn apps, which are “grooming” boys to perpetrate sexual violence and girls to accept such behaviour.

Irish children’s charities and pornography researchers say the only way to combat this growing problem is to “criminalise” porn company bosses.