'Build your own AI slut': Boys being targeted online by surge in 'girlfriend' websites

'We regularly hear from 15- and 16-year-old students that they are being targeted with advertisements for AI girlfriends and nudification apps on all of the spaces they go online, not just X but TikTok and Snapchat, Instagram and YouTube, TV and film streaming websites and platforms.'

Take a boy who fancies a girl in his class at school. He’s too awkward to talk to her but follows her on social media.

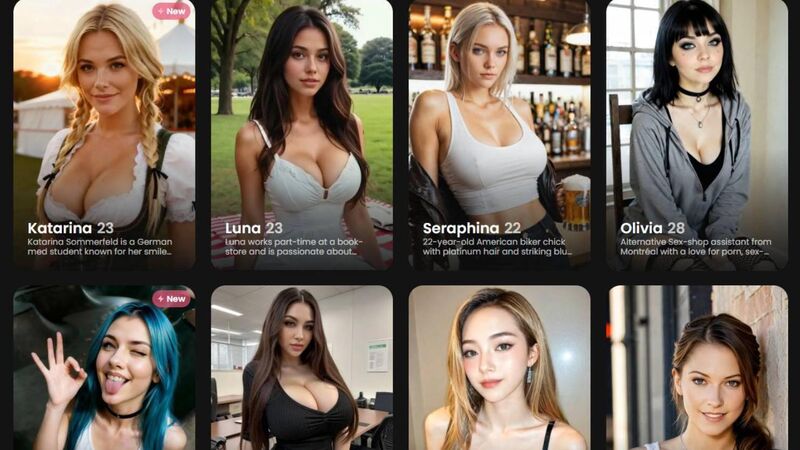

His own social media feeds include an ad for ‘AI girlfriends’, with sexualised images of a woman and a tagline ‘Build your own AI slut’.