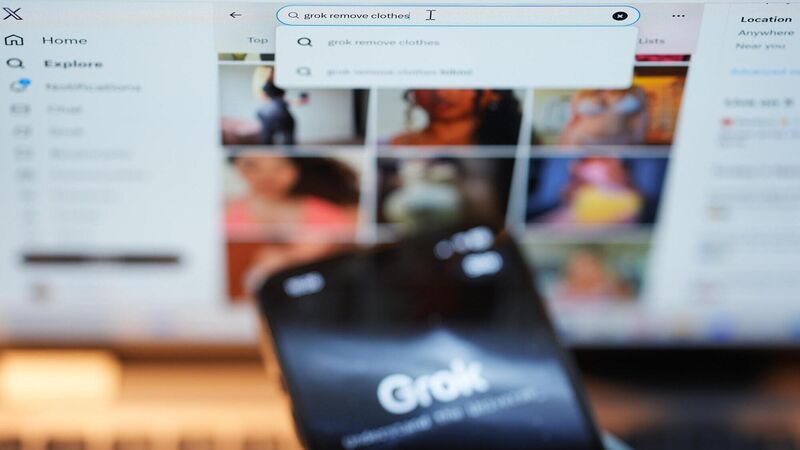

UCC researchers launch world-first tool to curb harmful AI deepfake abuse

The UCC researchers said that educating internet users not to engage with AI-generated sexual exploitation must be part of the response.

Researchers at University College Cork (UCC) have unveiled a “world-first tool” aimed at reducing the kind of harmful engagement with explicit artificial intelligence (AI)-generated images seen in the Grok controversy.

The free, 10-minute intervention, dubbed ‘Deepfakes/Real Harms’, is designed to reduce users’ willingness to engage with harmful uses of deepfake technology, such as creating non-consensual explicit content.