Apple announces new safety tools to detect child sexual abuse content on iCloud

Apple has announced a trio of new child safety tools (Apple/PA)

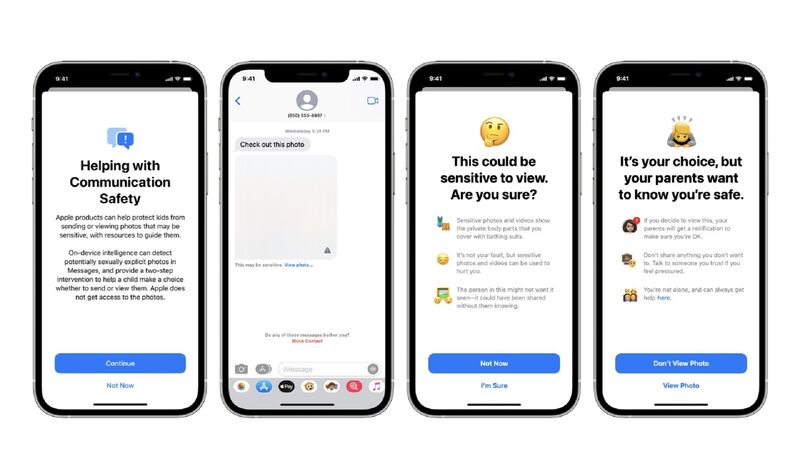

Apple has announced a trio of new child safety tools designed to protect young people and limit the spread of child sexual abuse material (CSAM).

Among the features is new technology that will allow Apple to detect known CSAM images stored in iCloud Photos and report them to law enforcement agencies.